Nvidia is playing some serious games.

When Jensen Huang and his two partners founded Nvidia in 1993, the graphics chip market had many more competitors than the CPU market, which had only two. Nvidia’s competitors in the gaming market included ATI Technologies, Matrox, S3, Chips & Technology and 3DFX.

A decade later, Nvidia had beaten every one of them except ATI, which was acquired by AMD in 2006. For much of this century, Nvidia has shifted its focus to powering supercomputers, high-performance computing (HPC) and artificial intelligence in the enterprise with the same technology that renders video games at 4K resolution.

The results of this shift are clear in Nvidia’s financial reports and across the industry. In the most recent quarter, data center revenue reached $3.81 billion, a year-on-year increase of 61%, accounting for 56% of Nvidia’s total revenue. In the latest list of the Top500 supercomputers, 153 are running Nvidia accelerators, compared with only nine from AMD. According to IDC, Nvidia held 91.4% of the enterprise GPU market in 2021, while AMD held 8.5%.

How did this shift come about? At the beginning of this century, Nvidia realized that GPUs, which are essentially floating-point math coprocessors with thousands of cores running in parallel, were well suited for HPC and AI computing. Like 3D graphics, HPC and AI rely heavily on floating-point math.

The first step in transforming the business came in 2007, when former Stanford University computer science professor Ian Buck developed CUDA, a C-like language for GPU programming. Video game developers don’t write code for the GPU directly; they program to Microsoft’s DirectX graphics library, which in turn talks to the GPU. CUDA provides a way to code directly to the GPU, just as programmers using C/C++ code to the CPU.
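To give a sense of what coding directly to the GPU looks like, here is a minimal sketch of a CUDA program that adds two arrays, with each GPU thread handling one element. It is an illustration only, not code from Nvidia or from anyone quoted here; the names `vector_add` and `run_vector_add` are hypothetical, and the use of unified memory is an assumption made to keep the example short.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Kernel: runs on the GPU. Each thread adds one pair of elements.
__global__ void vector_add(const float* a, const float* b, float* out, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;  // global thread index
    if (i < n) {
        out[i] = a[i] + b[i];
    }
}

// Host helper (hypothetical name): fills two arrays, launches the kernel,
// and returns true only if every GPU result matches the expected sum.
bool run_vector_add(int n) {
    float *a = nullptr, *b = nullptr, *out = nullptr;
    size_t bytes = static_cast<size_t>(n) * sizeof(float);

    // Unified (managed) memory is visible to both CPU and GPU.
    bool ok = cudaMallocManaged(&a, bytes) == cudaSuccess &&
              cudaMallocManaged(&b, bytes) == cudaSuccess &&
              cudaMallocManaged(&out, bytes) == cudaSuccess;

    if (ok) {
        for (int i = 0; i < n; ++i) {
            a[i] = 1.0f * i;
            b[i] = 2.0f * i;
        }

        int threads = 256;                         // threads per block
        int blocks = (n + threads - 1) / threads;  // enough blocks to cover n
        vector_add<<<blocks, threads>>>(a, b, out, n);

        // Check for launch errors, then wait for the GPU to finish.
        ok = cudaGetLastError() == cudaSuccess &&
             cudaDeviceSynchronize() == cudaSuccess;

        for (int i = 0; ok && i < n; ++i) {
            ok = (out[i] == 3.0f * i);
        }
    }

    cudaFree(a);
    cudaFree(b);
    cudaFree(out);
    return ok;
}

int main() {
    bool ok = run_vector_add(1 << 20);
    std::printf("%s\n", ok ? "results match" : "mismatch or CUDA error");
    return ok ? 0 : 1;
}
```

Compiling this requires Nvidia’s `nvcc` compiler and running it requires an Nvidia GPU, which is a small-scale picture of the platform lock-in that comes with CUDA.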

Fifteen years later, CUDA is taught at hundreds of universities around the world, and Buck is the head of Nvidia’s AI efforts. CUDA allows developers to make GPU-specific applications – something that wasn’t possible before – but it also locks them into the Nvidia platform because CUDA is not easily portable.

Few companies operate in both the consumer and enterprise spaces. HP split itself in two to better serve these markets. Manuvir Das, Nvidia’s vice president of enterprise computing, said the company is “absolutely focused on enterprise companies today. Of course, we are also a gaming company. That’s not going to change.”

Both the gaming and enterprise sides of the company use the same GPU architecture, but the company treats the two businesses as separate entities. “So in that sense, we’re almost two companies in one. It’s one architecture, but two very different routes to market, customer segments, use cases, all of that,” he said.

Das added that Nvidia has a range of GPUs with different capabilities depending on the target market. Enterprise GPUs have a transformer engine, which can handle tasks such as natural language processing, that gaming GPUs don’t have.

Addison Snell, principal researcher and CEO of Intersect360 Research, said Nvidia is currently straddling both markets very well. However, “the growth in GPU computing has really put them all in on enterprise computing and AI. And I think they’re primarily serving the hyperscale market there now, which is where most of the AI spending is coming from,” Snell said.

Anshel Sag, principal analyst at Moor Insights & Strategy, agrees. “I feel like a lot of the company’s efforts are very focused on today’s business, but I still think there’s a lot of gaming in its overall branding,” he said.

Sag believes Nvidia’s biggest room for improvement lies in mobile technology, where it trails Qualcomm. “I think they’re very weak in mobile, and mobile is not limited to smartphones but includes handheld gaming and AR/VR applications. I think that puts them on the edge and I think they’re going to face competition in the future,” Sag said.

According to Snell, Nvidia continues to downplay HPC in favor of AI and focus on hyperscale companies, relying on cloud computing as the delivery mechanism for all types of enterprise computing.

“This demonstrates Nvidia’s goal to become a complete solutions provider for hyperscale and cloud, while diluting its efforts as a component provider to end users through traditional server OEMs,” Snell said.

Competing with its partners

Nvidia operates differently than other chip giants because it competes with its OEM partners. That has led to at least one public falling-out.

In September, peripheral manufacturer EVGA announced that it would no longer produce Nvidia cards. EVGA is one of the top vendors in the market, with graphics cards accounting for 80% of its revenue. So it had to be very angry with Nvidia to give up 80% of its revenue.

EVGA CEO Andy Han listed a number of grievances with Nvidia, the most important of which is that EVGA has to compete with Nvidia itself. Nvidia makes graphics cards and sells them to consumers under the Founders Edition brand, something AMD and Intel do very little of, if at all.

Additionally, Nvidia’s own line of graphics cards is priced lower than its licensees’ cards. So Nvidia is not only competing with its licensees, but also undercutting them.

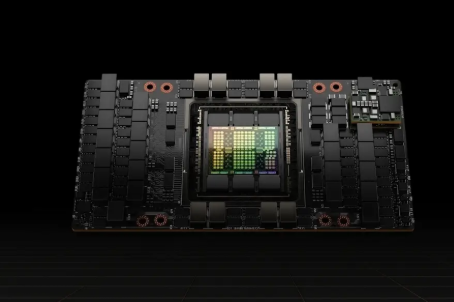

Nvidia is doing the same thing on the enterprise side, selling DGX server units (rack-mounted servers with eight A100 GPUs) to compete with OEM partners like HPE and Supermicro. Das defended the practice.

“For us, DGX has always been an AI innovation tool that we test on a lot of projects,” he said, adding that building DGX servers gives Nvidia the opportunity to shake the bugs out of the system and pass that knowledge on to OEMs. “Our partnership with DGX gives OEMs a big head start in getting their systems ready. So it’s really an enabler for them.”

But both Snell and Sag believe Nvidia should not compete with its partners. “I’m very skeptical of this tactic,” Snell said. “It violates the principle of not competing with your own customers. If I were one of the major server OEMs, I wouldn’t like the idea of Nvidia now acting as a system provider and excluding me.”

“I think those [DGX] systems do compete to some extent with what their partners are offering. But having said that, I also think Nvidia is not necessarily going to support and service those systems the same way their partners do. And I think that’s an important part of the enterprise solution that’s not really on the consumer side,” Sag said.

AMD, Intel strengthen their GPU technology

So who will knock Nvidia off its throne? Throughout the history of Silicon Valley, most companies that have experienced major declines have not been knocked down by competitors, but have fallen apart from within.

IBM and Apple in the 1990s, Sun Microsystems, SGI, Novell, and now Facebook all failed or nearly failed because of poor management and poor decision-making, not because someone else came along and eliminated them.

Nvidia has had product misfires along the way, but it has always quickly corrected course with next-generation chips. It has also had pretty much the same executive management team since the beginning, and that team hasn’t made many mistakes.

One thing that has helped Nvidia achieve such a dominant position is that it has had the market largely to itself. AMD struggled for years, while Intel made several failed attempts at developing GPUs.

But that’s not the case anymore. AMD has completely bounced back, and its GPU technology, Instinct, now powers Frontier, the world’s fastest supercomputer, giving it bragging rights. Intel’s Xe GPU architecture finally appears to be on track and will ship in the upcoming Ponte Vecchio enterprise GPU.

But if any company has Das looking over his shoulder, he isn’t admitting it. “I think the real competition we’re seeing is basically how do we [support] workloads that already exist that are running non-accelerated?” he said.